The first principle is that you must not fool yourself — and you are the easiest person to fool.

— Richard Feynman, 1974

In most cases, benchmarking is a kind of performance investigation. Benchmarks allow getting new knowledge about software and hardware. This knowledge can be used later for different kinds of performance optimization.

Once you get the desired level of performance, you usually want to keep this level. And you typically don’t want to have situations when someone from your team accidentally spoils your performance improvements. How can we prevent such situations? Well, how do we usually prevent situations when someone spoils our code base? We write tests! If we don’t want to have any performance regressions, we need performance tests! Such tests can be a part of your CI pipeline, so it will be impossible to make any unnoticed performance degradations!1

So, it looks simple: we write performance tests and get profit! Sounds good, doesn’t it? Unfortunately, it’s harder than it sounds. In performance tests, it’s not enough to just measure performance metrics of your code; you also have to know how to process these values. A benchmark without analysis is not a benchmark, it’s just a program that prints some numbers. You always have to explain the benchmark results.

When you run a benchmark locally, you have all the relevant source code under your hands: you can read it, you can play with it. You can do additional actions depending on the current state of the investigation. You can look at the current data and make a decision about the next step. When a benchmark becomes a performance test, you should automate this process. This is much harder because the automation logic should handle future changes to the source code. You don’t know the future, you don’t know the performance metrics that you will get tomorrow, you can’t look at the future distribution plots, and you can’t make nonautomated decisions about future problems. Everything should be automated! And this is a huge challenge: you have to predict possible problems and write algorithms for analysis without knowledge of the data. You should design not only a set of benchmarks, but also a set of performance asserts and alarms that should notify you in case of any problems.

Performance testing goals

What problems do we want to solve? What exactly do we want when we are talking about performance tests? We should clearly understand our goals before the start; we should understand what we want to achieve.

Kinds of benchmarks and performance tests

There are a lot of different kinds of performance tests. You should decide what your test should look like and what exactly it should measure. For example, it can be a stress test that checks what’s going on with your web server under high load. Or it can be a user interface test that checks that UI controls are responsive and work without delays. Or it can be an asymptotic test that verifies that the algorithmic complexity of a method is O(N). Or it can be a functional test that measures the latency of a single operation. Knowledge of these kinds allows you to choose how to write performance tests in each situation.

Performance anomalies

The duration of a test is not a single number; it’s always a distribution. Sometimes, this distribution looks “strange.” For example, it can be multimodal, or it can have an extremely huge variance. We say that distributions of “unusual shape” are performance anomalies. It’s not always a problem, but hunting for performance anomalies can usually help you to find many problems that you can’t find in another way.

Strategies of defense

When should we run our performance tests: before or after the merge into the main branch in a version control system? Should we run performance tests per each commit or it will be enough to run it once per day? How much time should we spend on performance testing and what kind of degradation could we detect in each case? Can we implement completely automatic CI logic? Or do we always have to do things manually? What can we do if a product with performance problems has already been released? There are different strategies of defense from performance degradations: each of them has advantages and disadvantages, and each of them helps you to solve a specific set of problems.

Performance space

For each test, you can collect many metrics. You can measure the total wall-clock time, and you can check out the hardware counters or the number of GC collections. You can collect these metrics only from a single branch or from several branches. There are a lot of ways to get performance numbers, and you should know about them because this knowledge will help you choose which of them will work best for you.

Performance asserts and alarms

Everything is simple with functional tests because they are usually deterministic. If you don’t have tricky race conditions, a test always have the same result. It’s clear when a test is green; depending on your requirements, you can easily check it with a series of assertions.

In the case of performance tests, everything is more complicated. Remember that a test output is a series of numbers; you have new numbers per run even on the same machine. Moreover, in some cases, you have to compare data from different machines. The standard deviation can be huge, so it can be hard or even impossible to detect 5-10% degradation. It’s very important to define your alarm criteria and answer a simple question: “When is a test red?”

Performance-driven development (PDD)

This approach is similar to test-driven development (TDD) with one exception: instead of the usual functional tests, we write performance tests. The idea is simple: you shouldn’t start to optimize anything before you write corresponding performance tests that are red. Indeed, it sounds simple, but it’s a very powerful technique; it will help you to save a lot of time and nerves.

Performance culture

Unfortunately, performance tests will not work well if members of the team don’t care about performance. You need a special kind of culture in your team and your company. Not only is performance testing about technologies; it’s also about attitude.

There is no universal approach that allows getting a performance testing system for free in any project. The best approach for you depends on your performance requirements and on CI/human resources. In this chapter, we will learn basic information about performance tests that will help you to understand which practices can be helpful for your projects and your team.

Many examples in this chapter are based on development stories about IntelliJ IDEA, ReSharper, and Rider. I will mention these projects without additional introductions.

Let’s start with performance testing goals!

Performance Testing Goals

In the modern world, we often release new versions of our software. We are trying to fix old bugs and implement excellent new features. Sometimes, though, these new features do not work as well as expected. However, this is a normal situation: it’s tough to write new code without introducing new problems. That’s just how it works. Hopefully, your users understand this and will wait for a new version with fixes. However, in many cases, it’s almost inexcusable when you’re breaking old features or make them slow. As a performance engineer, the worst user feedback I ever get was like: “The new version of your software works so slowly that I have to roll back to the previous version” or even “I have to switch to the product of your competitors.” Sometimes we have performance degradations—this is the problem that we are going to solve in this chapter. We have defined the problem, and now it’s time to define the goals!

Goal 1: Prevent Performance Degradations

This is our primary goal: prevent performance degradations . Some developers may confuse this goal with “make software fast” or “make users happy with our performance.” Be careful! When we say “prevent performance degradations,” this is not about the overall level of performance or the happiness of our users. “Prevent performance degradations” means that each version of our software should work as fast as or faster than the previous one.

Remark 1. Programming is always about trade-offs; we can’t constantly improve the performance of all features in our program. Sometimes we have to slow down one part because we want to speed up another part (e.g., we spend time on loading caches on startup, which allows fast request processing in the future). This trade-off can be a conscious decision, and it’s completely OK. However, in most cases, developers slow down features accidentally. In large programs, it’s tough to measure performance impact on the whole product even for small changes. Thus, our goal actually sounds like this: prevent accidental performance degradations.

Sometimes even 1% degradation can be a huge problem.

An example: Let’s say we have a web server that processes requests. We host this server in the cloud, and we pay a cloud provider for the time resources at a fixed rate. In our spherical example in a vacuum, each request always takes 100 ms. 1% degradation means that we will get 101 ms per request after a deployment. If we have billions of such requests, the total processing time will increase noticeably.2 The most important thing is that our bills will also increase by 1% .

Sometimes even 500% degradation can be not a problem.

An example: We have a server that displays statistics about user activities. Let’s say that we don’t need real-time statistics; it’s enough to refresh it daily. So, we have a console utility that regenerates a statistic report and deploys it. With the help of cron,3 we run it every day at 02:00 AM. The utility takes 1 minute, so the report is ready at 02:01 AM. A developer from your team decided to implement additional “heavy” calculations: now the report contains new useful information, but the total generation time is 6 minutes; the report is ready at 02:06 AM. Is this a problem? Probably not, because analytics will review the report only in the morning. If the utility takes 10 hours, it can be a problem, but nobody cares about five extra minutes in this case.

Sometimes it’s impossible to talk about degradations in terms of percentages.

An example: Because of a complicated multilevel hierarchical cache, 20% of requests take 100 ms, 35% of requests take 200 ms, and 45% of requests take 300 ms. After some changes, 20% of requests take 225 ms, 35% of requests take 180 ms, and 45% of requests take 260 ms. Is this a good change or a bad change? Do we have a performance regression in this case? (Try to calculate the average processing time for both cases.) Well, this is another trade-off problem: we can’t answer this question without business requirements.

We will discuss different performance degradation criteria in the “Performance Asserts and Alarms” section.

Remark 3. In large software products, it’s very hard to prevent all possible performance degradations. “Prevent all performance degradations” sounds like “prevent all bugs” or “prevent all security vulnerabilities.” Theoretically, it’s possible. In practice, it requires too many resources and too much effort. You can write thousands of performance tests, and you can buy hundreds of CI servers that run these tests all the time. And it will help you to catch most problems in advance, but probably not all of them. Also, some performance degradations may not affect the business goals, so doesn’t always make sense to fix them. Thus, when we say “prevent all performance problems,” we usually mean “prevent most of them that matter.”

Goal 2: Detect Not-Prevented Degradations

Since it’s almost impossible to prevent all performance degradations, we have a second goal: detect not-prevented degradations . In this case, we can fix them and recover the original performance. Such problems can be detected on the same day, in the same week, in the same month, and even one year later. We will discuss what kinds of problem we can detect in different moments in the “Strategies of Defense” section. The most important thing here is that we want to detect these problems before users/customers find them and start to complain about them.

Goal 3: Detect Other Kinds of Performance Anomalies

Degradation is not the only problem we can get. In this chapter, we will discuss so-called “performance anomalies,” which include clustering, huge variance, and other kinds of “strange” performance distributions. Usually (but not always) such anomalies help to detect different kinds of problems in the business logic. If you implement a system for performance analysis, it makes sense to check the performance space for these anomalies as well. One cool thing about it: some anomalies can be detected in a single revision, so you don’t have to analyze the whole performance history or compare commits.

Goal 4: Reduce Type I Error Rate

If you skipped the chapter about statistics (Chapter 4), I will explain this goal in simple terms. A Type I error (false positive result) means that there is no performance degradation, but performance tests detect “fake” problems. Consequences: developers spend some time on investigations in vain. This is not just a waste of our most precious resource (time of developers), it’s also a substantial demotivating factor. Having a few Type I errors per month is OK. Moreover, you should expect to have such errors; it’s too hard to implement an excellent performance testing system with zero Type I error rate. However, if you get several false positive results per day, developers will not care about it. And it sounds reasonable: what’s the point to spend time on useless investigations each day? You can have “real” problems among the “fake” problems, but you will miss them: developers will ignore all alarms because they are likely false alarms. The whole idea is destroyed: performance tests do not benefit and instead distract your team members.

Thus, you should monitor Type I errors. If you have too many of them, it makes sense to reduce performance requirements and weaken the degradation criteria. It’s better to miss a few real problems than to have a completely useless set of performance tests.

Goal 5: Reduce Type II Error Rate

Type II error (false negative result) means that there is performance degradation, but we failed to detect it. Consequences: serious performance problems can be delivered to users with the next update. In this case, we didn’t solve our main problem; we didn’t prevent degradation. Since it’s impossible to prevent all performance degradation, we can try to keep the number of such situations low.

Evaluation of the effectiveness of performance tests

Detection of weaknesses and pieces of code that should be covered by additional performance tests

If you detected many problems in time, it will encourage the team to write new performance tests

If you didn’t have any significant issues (both detected and nondetected), you probably don’t need performance tests for these projects, and it doesn’t make sense to invest time into it in the future.

Goal 6: Automate Everything

It’s not easy to formulate proper degradation criteria and get low Type I and Type II error rates. Sometimes you may be tempted to monitor performance manually instead of writing a reliable system for performance tests. For example, performance tests can produce thousands of numbers that are aggregated and displayed in a monitoring service. Next, you (or one of your colleagues) check performance reports every day, manually look for problems, and notify the rest of the team of the results. This is not a good approach because there are always many problems with the human factor: the person who is responsible for monitoring can be sick, on vacation, or busy. In this case, we will not get any alarms even if we have essential problems. In addition, he or she can miss some dangerous problems due to inattentiveness.

Automatic reports

You can generate a full report about the problem automatically. Such a report could include links to the commits (if you have a web service that allows browsing your code base), a list of authors of these changes, performance history of this test, links to other tests from the same test suite with new performance problems (they can be related), and so on. The main idea here is that the analyst shouldn’t look for additional data; all necessary information should be collected automatically. You can even automatically create an issue in your issue tracker and easily track all performance problems.

Automatic bisecting

It’s not always possible to run all performance tests for each commit. Imagine that one of your daily performance tests is red and there are N=127 commits in this day by ten different people. How do you find the commit that introduces the problem? It’s a good idea to start to bisect these commits. Let’s check the commit 64 (for simplification, assuming that we have a linear history without branches). If the test is red, it means that the problem was introduced before this commit, and we are going to check commit 32. If the test is green, it means that the problem was introduced after this commit, and we are going to check commit 96. If we continue this process, we can find the commit with problem after log2(N) iterations (in the perfect world without branches). Manual bisecting is a waste of developers’ time. This process can also be automated: the report should include the specific commit and the author of this commit (this person should start to investigate the issue).

Automatic snapshots

One of the first steps in such investigations is profiling. Once we get a slow test, we can automatically take a performance snapshot before and after the change. In this case, the analyst can just download both snapshots and compare them. It can allow finding the problem even without the need to download the sources and build it locally: many stupid mistakes can be found only with the snapshots.

Automatic step-by-step analysis

If you have a 1-minute degradation in a huge integration test, you probably have a problem in a single subsystem instead of a project-wide problem. In this case, you can measure separate steps for both cases and compare them automatically. After that, a notification (or an issue) can contain additional information like “it seems that we have a problem with these two steps; the rest of the steps doesn’t have noticeable degradation.”

Automatic continuous profiling

If you have a pool of servers with services that sometimes suffer from accidental performance drops, you can try to profile them automatically. If the overhead of such profiling is too big, you can randomly profile only a part of the pool. For example, pick 10% of the servers and profile them for 30 seconds, then pick another 10%, and so on. You can play with the exact numbers and get a profile snapshot at the moment the problem reproduced (maybe it will not be on the first try). The randomized approach helps to reduce the profiling overhead on your production system.

Try to come up with your ways to automate routine. You should manually do only work that cannot be automated and requires creativity. If a series of performance investigations has common parts, you should try to automate these parts. It allows saving the time of developers and simplifying the investigation process for people who don’t have distinctive performance skills.

Summing Up

Let’s summarize. Our main problem: sometimes we have performance degradations. If we understand what “performance degradation” means well, we can try to prevent accidental performance degradations (Goal 1). Unfortunately, we can’t prevent all of them, so we want to detect not-prevented degradations in time (Goal 2) and detect other kinds of performance problems (Goal 3). We also want to reduce Type I error (false positive: there are no degradations, but we detect “fake” problems) rate (Goal 4) and Type II error (false negative: nondetected degradations) rate (Goal 5). Everything that can be automated should be automated (Goal 6).

Now we know our problems and goals. It’s time to learn what kinds of performance tests we can choose.

Kinds of Benchmarks and Performance Tests

Cold start tests: situations when we care about startup time

Warmed-up tests: situations when an application is already running

Asymptotic tests: tests that try to determine the asymptotic complexity (e.g., O(N) or O(N^2))

Latency and throughput tests: instead of asking “How much time does it take to process N requests?”, we ask “How many requests can we process during a time interval?”

Unit and integration tests: if you already have some usual tests (which are not designed to be performance tests), you can use the raw durations of these tests for performance analysis

Monitoring and telemetry: looking at the production performance in real time

Tests with external dependencies: tests that involve some part of the external world that we can’t control

Other kinds of performance tests: stress/load tests, user interface tests, fuzz tests, and so on

All of these kinds can be applied not only for performance testing but also for regular benchmarking. Let’s start with the cold start tests.

Cold Start Tests

Method cold start

When you run a method for the first time, a lot of time-consuming things may happen on different levels: from JIT compilation and assembly loading on the runtime level to some first-time calculations for static properties on the application logic level.

Feature cold start

Difference between cold and warm time for a method can be negligibly small. However, it can be noticeable when we are talking about thousands of methods and many assemblies. Because of that, a user can experience delays when he or she launches a feature for the first time (especially if this feature involves tons of methods that were not invoked before).

Application cold start

Startup time is important for many kinds of applications. And it’s definitely crucial for desktop and mobile applications. The perfect situation is a situation when the user instantly gets a ready application after a double-click on a shortcut (or launching it any other way). Any delay can make him or her nervous. Imagine a situation when you should quickly make a few edits in a file. You open it in your favorite text editor and… . And you have to wait a few seconds until the text editor is initialized. If you edit files often and close the editor each time, these few seconds can be irritating. For some people, startup time is critical; they might prefer a pure-featured text editor that starts instantly over a full-featured text editor that starts in a few seconds.

OS cold start

If your benchmark interacts with different OS resources, a physical restart can be required for a cold start test.

Fresh OS image

Sometimes it’s not enough to reboot the operating system; we may need a fresh image of the system. The old test runs can make any changes on the disk that can be important for subsequent launches. For example, Rider uses a pool of TeamCity agents for running hundreds of build configurations with tests every day. TeamCity refreshes the agent images once per several days: then the fun begins. Sometimes, we have a significant performance difference between the last (warmed) test run on the old image and the first (cold) test run on the new image (without any changes in the source code base). We don’t use a fresh OS installation each time, because such approach has a huge infrastructure overhead and the described problems are not frequent.

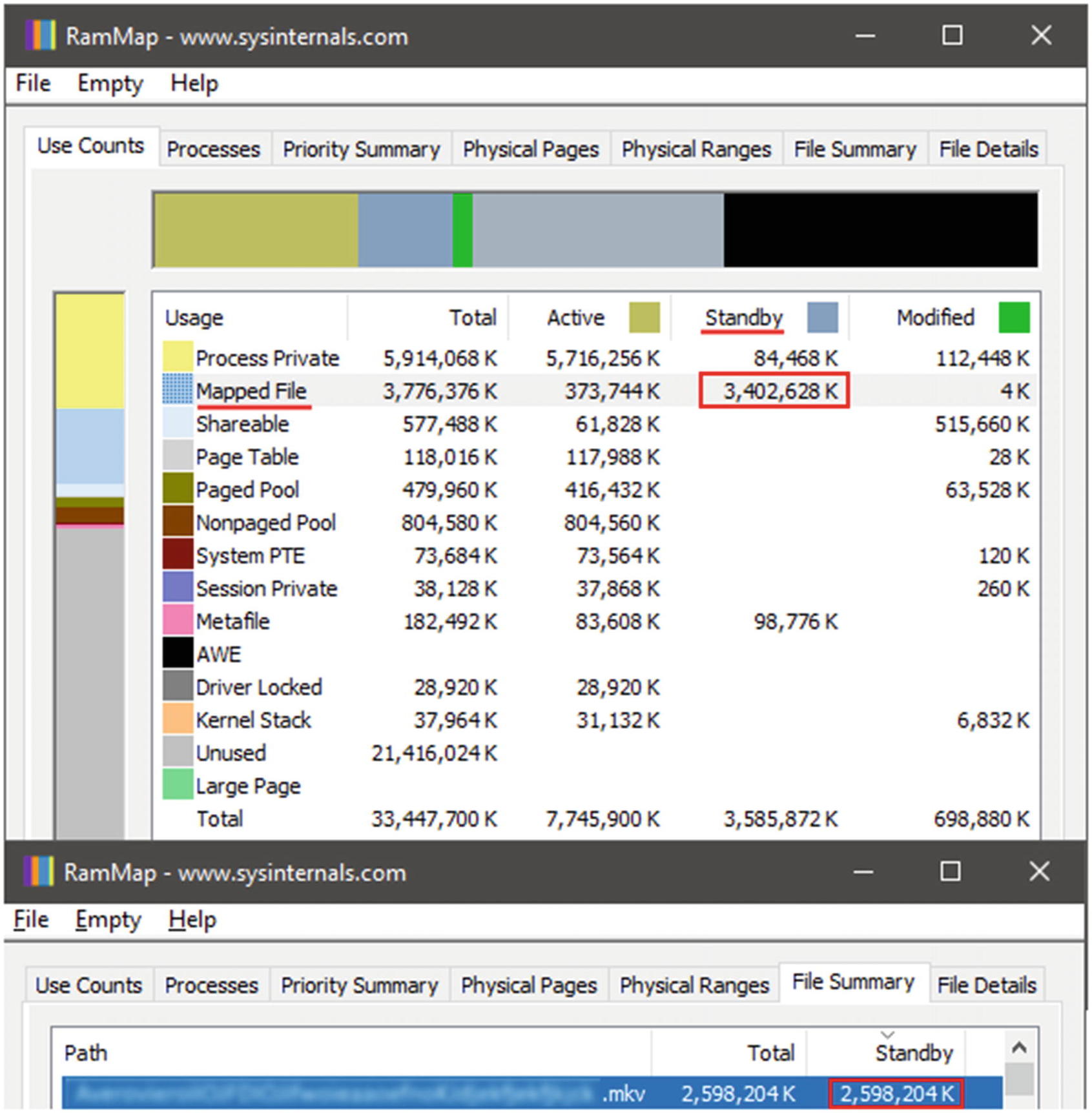

RAMMap shows huge “Standby” memory use for closed file

How is this possible? We closed the player; there are no more applications that use this file. Why do we see it in RAMMap? And what does “Standby” mean?

You can imagine the “Standby” category as a memory cache. After closing the player (which loaded the whole movie file into main memory), there is no need to clear the memory instantly. We can mark this memory as “free” (thus, you will not see it in the Task Manager as a part of “usual” memory) and clear it later when another application asks for additional memory allocation. However, if we decide to watch the movie again, the video player can reuse the file from the “Standby” list. The startup will be faster because we don’t have to load the file into memory again. On the one hand, it’s great: we have better performance for all player launches except the first one. On the other hand, it’s harder to write a performance test or a benchmark for the player cold start. In this specific case, you can manually clear the “Standby” list.5 However, it’s hard to track all the resources that can be reused in the general case and manually clear these resources each time. The system reboot is a universal way to achieve a sterile environment for an honest cold start.

When you run a performance test (or a benchmark) for cold start, you should clearly understand what exactly should be “cold.” In most cases, you have to restart the whole application or even reboot OS before each iteration. This is not always an acceptable way (because each iteration takes too much time), so programmers are looking for other solutions that allow making the environment cold without “heavy” restarts. For example, you can clear OS resources via native API instead of OS restarting or perform each method invocation in a new AppDomain instead of restarting the application.

Warmed Up Tests

It’s always hard to write cold start tests because it’s impossible to run several iterations in a row: you have to restart the whole application (or even the operating system) before each iteration. It’s much easier to write warmed-up tests, and it’s more popular because in many applications (especially for web services), you usually don’t need to care how long startup takes; the performance of a warmed application is more interesting.

However, correct warmed-up tests also require some preparation. The most important thing is the absence of side effects: all iterations must start from the same state. Unfortunately, most of the benchmarks spoil the environment, so the environment has to be recovered. There are several common ways to achieve it.

Such approach can be fine for macrobenchmarks (if we sort tons of elements), but in the case of microbenchmarks (let’s say list.Count < 100), we can get big errors because of these interrupts between stopwatch measurements. In Chapter 2, we discussed that we should use many iterations for microbenchmarks because the Stopwatch resolution is not enough to handle nanosecond operations: if we try to measure the duration of a single ListSortBenchmark call, the ElapsedMilliseconds will have an inaccurate value. In the preceding example, the loop multiplies the error instead of reducing it! Moreover, IterationSetup calls between measurements can produce additional side effects. For example, if this method allocates memory, it can cause a sudden garbage collection during the measurements.

Next, you can get Duration(Run) as Duration(SetupRunCleanup) - Duration(SetupCleanup). This trick is not always successful (especially if Setup and Cleanup allocate many objects and have complex performance distributions), but it usually works for simple cases.

Another factor that can affect the benchmark is the CPU cache. The effect of this cache on the program is simple: the recently read data can be read much faster than data that hasn’t been read by anyone for a long time. In ListSortBenchmark , we should choose the optimal strategy for the CPU cache state. When you sort the array for the first time, CPU loads the list content (or a part of the list in the case of a huge list) into the cache. Next iterations will be faster because we already have the elements (or some of the elements) in the cache. Here we should choose between a cold and a warm state for it. The decision depends on how you are going to use the Sort method in the real application. If you work with elements before sorting, you get a warm list: everything is OK with the benchmark because it also uses the warmed list. If you don’t touch the elements before sorting, you get a cold list in real life. In this case, the benchmark requires cache invalidation in the setup method as well (we will discuss how to do it in Chapter 7).

The approach also has its own problems. Given how those lists are created, there is a high tendency for those objects to live in approximate sequential memory; therefore all the CPU cache pollution is not enough to not skew the results. A better approach for that kind of test is to create all the lists and ensure that the amount of memory used by those is higher by at least 10× the maximum size of the CPU total cache available. Then we should create another list with a random uniform distribution of numbers and iterate over that list to get the indexes. As you are always running the same sequence, the memory effects would be reduced to the index list (therefore diminishing its impact on the benchmark results) and at the same time ensuring a uniform distribution cache pollution. We will discuss more details about this topic in Chapter 8.

We want to know the duration of list.Add .

Actually, we want to gain knowledge of the list.Add duration and use it for solving a real problem (e.g., writing a fast algorithm). The solution of the problem is our “true” goal, but not the knowledge itself. This is important because the correct way to benchmark list.Add depends on how you are going to use it.

We want to add many elements in a list and want to know how much time does it take.

In this case, we probably have to benchmark the addition of N elements instead of a single one. Remember that not all of the Add calls are equal: some of them can produce resizing of the internal array. You can play with the initial state, the initial capacity, the number of elements, and so on. If you want to know the duration of the adding of N elements, you should benchmark this. The performance cost of a single Add is useless for you because you can’t multiply it by N (in the general case) to get the result.

We are going to make a few edits in the Add implementations and check for performance improvements/degradations.

Any performance changes in the Add method will also affect the performance of the Add/RemoveAt pair. It will be hard to say something about how much the edits affect the Add method (quantitative changes), but we can say is it better or worse (qualitative changes). Also, we still have to check cases with the resizing of the internal array carefully.

We are going to use a list as a stack (with Push/Pop operations) with the known maximum capacity and want to know the duration of the “average” operation.

In this case, the Add/RemoveAt benchmark is a great solution because there is no difference between Add and RemoveAt here: we have to measure these methods together.

As you can see, everything depends on the goal. There are many ways to use quick operations like list.Add, but the algorithm performance depends on how you use it. Typically, you can’t get the “reference” operation duration, because this duration depends on the use case. Always ask yourself: why do you want to get knowledge about method performance? How are you going to use this method?6 If you answer these questions first, it will help you to design a good benchmark and decide when you need a cold start test and when you need a warmed-up test (or a combination of the two).

Asymptotic Tests

Sometimes it’s impossible to run all tests on huge data sets. But we can run them on several small data sets and extrapolate the results.

Let’s consider an example. In IntelliJ IDEA, there are a lot of code inspections (as in any IDE). From the user’s point of view, an inspection is a logic that shows a problem with your code (from compilation errors and potential memory leak to unused code and spelling problems). From the developer point of view, an inspection is an algorithm that should be applied to the source code. Different algorithms are independent and don’t affect each other. When IntelliJ IDEA analyzes a file, it applies all inspections to each file. Since there are so many inspections, they should be efficient. Even a single nonoptimal inspection could be a reason for performance problems in the whole IDE.

Well, how should we choose which inspection is “nonoptimal”? There is a simple rule: a proper inspection should have an O(N) complexity where N is the file length. If the inspection complexity is (N^2), we will get a performance problem with huge files.

Portability

Results almost always don’t depend on hardware: we should get the same result on slow and fast computers.

Benchmarks take less time

The inspection performance impact can be noticeable only in huge files. There are hundreds of inspection; we have to wait too long until we benchmark each inspection on each huge file from the test data. The asymptotic approach allows getting reliable results in less time. We can apply an inspection to a few small files, measure the analysis durations, and calculate the asymptotic complexity. Thus, we can check that the the inspection works fast enough without using huge files.

Many iterations

We can’t build a regression model with one or two iterations. We have to run many iterations if we want to build a reliable model that produces correct results.

Complicated implementation

It’s not easy to build a good regression model. If you are lucky enough, your performance function is polynomial. If you are not lucky, the performance function can’t be approximated by an analytic function. Even if the function type is known (and you have only to find the coefficient), it’s not always easy to build such model with a small error.

Thus, asymptotic analysis is not a silver bullet for all kinds of benchmarks, but it can be extremely useful when we want to get measurements for huge input data and we don’t want to wait too long .

Latency and Throughput Tests

(A) “How much time (T) do we need to process N requests?”

The metric here is the latency of processing of N requests (the time interval between the start and end of processing).

(B) “How many requests (N) can we process in the fixed time interval T?”

The metric here is the processing throughput. Such case is also called capacity planning or scalability analysis .

(A) In the first case, N is fixed. Thus, we have to do N iterations and measure the time between start and finish:

// Latencyvar stopwatch = Stopwatch.StartNew();for (int i = 0; i < N; i++)ProcessRequest();stopwatch.Stop();var result = stopwatch.ElapsedMilliseconds;

(B) In the second case, T is fixed. We don’t know how many requests can we process, so we will process requests until the time is over. In real life, it’s typically complicated multithreaded code, but we can write a very simple single-threaded benchmark:

// Throughputvar stopwatch = Stopwatch.StartNew();int N = 0;while (stopwatch.ElapsedMilliseconds < T) {N++;ProcessRequest();}var result = N;

If we have a linear dependency between N and T, there is no difference between these approaches . However, the difference can be huge if the dependency is nonlinear.

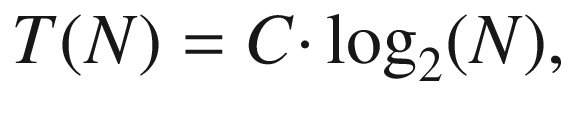

T = C · log2(N) Dependency for C=2 and C=4

N | log2 (N ) | T C = 2 | T C = 4 |

|---|---|---|---|

32 | 5 | 10 | 20 |

64 | 6 | 12 | 24 |

128 | 7 | 14 | 28 |

256 | 8 | 16 | 32 |

512 | 9 | 18 | 36 |

1024 | 10 | 20 | 40 |

Imagine that a manager asks you about the performance drop: “How much slower does it work now”? Further, imagine that he or she is not a very good manager and doesn’t want to hear anything about nonlinear dependencies and logarithms7; you should provide a single number as an answer.

(A) Let’s check how much time (T) it takes to process N = 1024 requests. When C = 2, T = 20sec. When C = 4, T = 40sec. The performance drop is 40sec/20sec or 2x.

(B) Let’s check how many requests (N) we can process in T = 20 seconds. When C = 2, N = 1024. When C = 4, N = 32. Performance drop is 1024/32 or 32x.

So, what’s the answer? 2x or 32x? Well, there is not one single correct generic answer. If you want to describe a situation in a general case, you should provide the model (T = C · log2(N) in our case) as an answer. If you want to describe a specific case, you should clearly define the case.

Usually, the target metric depends on your business goals. If the business goal is “Process N = 1024 requests as fast as possible,” you should use the “latency approach” (A). If the business goal is “Process as many requests as possible in T = 20sec,” you should use the “throughput approach” (B). If you have other business goals, you should design a set of benchmarks or performance tests that correspond to your goals. “Correspond” means that you measure the target case and use the correct set of metrics.

If you look at Table 5-1, you may think that capacity planning (the “throughput approach”) is similar to asymptotic analysis. This is not always true. Asymptotic analysis requires several measurements for building the performance model. Capacity planning can be implemented with a single measurement. However, you can use asymptotic analysis for capacity planning: the knowledge of T values for N = 32, … , 1024 allows predicting T for huge N like 2048, 4096, 8192, and so on without actual measurements.

Unit and Integration Tests

Some people are afraid of performance testing because it looks too complicated: they should make a lot of preparation (especially for cold/warm/stress tests), choose correct performance metrics, probably do some tricky math (especially for asymptotic analysis), and so on. I have some good news: if you have “usual” integration tests, you can use them as performance tests! There are many kinds of test classifications. In this book, we will use the term “integration test” for all not-unit tests: functional tests, end-to-end tests, component tests, acceptance tests, API tests, and so on. The main property of such tests that is important for performance testing is duration: the integration tests usually work much longer than instant unit tests. In fact, you can use any of your tests (even “usual” unit tests), which takes a noticeable amount of time (let’s say more than ten milliseconds). If a test takes several microseconds or nanoseconds, we can’t use it “as is” because the natural errors are too big; we have to transform such tests into “true” benchmarks. If a test takes more than ten milliseconds (or even several seconds or minutes, it’s much better), we can try to use it as a performance test without additional modifications.

It may sound strange because we don’t control accuracy for such tests, we don’t do many iterations, we don’t calculate statistics, and we don’t do anything that we usually do in benchmarking. These tests were designed to check the correctness of your program, not performance. It seems that raw duration of unit and integration tests can’t be used in performance analysis.

To me, it sounds strange to have so many performance data and don’t use it. Yes, errors are huge, accuracy is poor, results are unstable, everything is terrible. But this doesn’t mean that we can’t try to use it. In performance tests, every iteration is expensive because it consumes the CI resources and increases our waiting time. From the practical point of view, a good suite of performance tests is always a trade-off between accuracy and the total elapsed time. The unit and integration tests will be executed anyway because we have to check the correctness of the business logic. We will get the duration of these tests anyway without additional effort. It’s also a performance data. Moreover, it’s a performance data that we have for free. If it’s possible to get some useful information from this data (somehow), we should definitely do it!

Explicit performance tests

These tests were designed to evaluate performance. Explicit tests may require special hardware and tricky execution logic (with warm-up, many iterations, metrics calculation, and so on). The result of such test is a conclusion about performance (like “the test works two times slower than before” or “the variance is too huge”).

Implicit performance tests

These tests are “usual” tests that are designed to check logic. Each run of such tests has a duration, its performance number, which we get as a side effect. The result of such a test is a conclusion about correctness (green status for correct logic and red status for incorrect logic). “Implicit performance tests” means that these tests are not designed as performance tests, but we still can use them as such.

“Mixed” performance tests

It may sound obvious, and we will not discuss such tests in detail, but I still have to highlight this idea: you can check logic and performance at the same time. For example, we can write a huge integration stress test that covers the most performance-critical pieces of our code. Such a test can check that everything works correctly even under load (some race conditions can appear in such situations) and that we don’t have a performance regression in such a case.

Persistent CI agent

When we measure performance, it’s a good idea to run performance tests on the same hardware each time. It’s very hard (or sometimes impossible) to evaluate the performance impact of your changes when you compare the “before” performance data from one agent with the “after” data from another agent. It’s always better to have persistent CI agent (or set of agents) for explicit performance tests. This is not mandatory, but it’s highly recommended. In case of implicit performance tests, there is no such requirement8; they should work correctly on any agent.

Virtualization

Virtualization is a great invention that helps us to organize a flexible cloud infrastructure. However, a virtual environment is a poison for the accuracy of explicit performance tests. You never know who else is running benchmarks on the same hardware at the same time. Explicit performance tests usually require a dedicated real (not virtual) agent. Implicit performance tests should work correctly in any environment.9

Number of iterations

Most explicit performance tests require several iterations. Remember that performance of a method is not a single number; it’s a distribution. We can’t evaluate errors and build a confidence interval if we have only one iteration. And we can’t compare two revisions if we don’t know errors and variance. Of course, sometimes a test can be too expensive (it consumes too much time), so you can’t afford to run it several times. Implicit performance tests typically need only one iteration.10

Writing easiness

It’s easy to write implicit performance tests.11 I mean that every method which somehow calls your code can be a test. Different teams have different standards of coding, but most of them agree that the source code should be covered by tests. Some good development practices require writing tests (e.g., before writing a bug fix, you should write a red test for this bug and make it green with your fix). Typically, you get tests as an “artifact” of the development process. You write tests because it will simplify your life in the future and make you more confident in the quality of your code. Most of the unit tests are deterministic: a test is red, or a test is green. Moreover, it’s usually obvious when a test is green. If you are writing a method Mul(x,y) that should multiply two numbers, you know the expected output. Mul(2,3) should be 6. Not 5, not 7; there is only one correct answer: 6. When we are writing explicit performance tests and making performance asserts, it’s always complicated. For example, yesterday Mul took 18 nanoseconds; today it takes 19 nanoseconds. Is it a regression or not? How should we check it? How many iterations do we need? How should we evaluate errors? And the most important question: is the test red or green? If you have clear answers to all questions about performance asserts, ask your teammates about it. Are you sure that you have the same point of view? It’s so hard to write performance tests because there are no strict rules here. You should come up with your own performance asserts that satisfy your performance goal. It’s hard because there is no “absolute green status,” and there is no single “correct” way to write “performance asserts.” There are only trade-offs.

Time of execution

Speaking of trade-offs, the most interesting one is between accuracy and the execution time. Performance tests wouldn’t be so fun if we had unlimited amount of time. I wish I could perform billions of iterations for each of my benchmark or performance tests. Unfortunately, the world is cruel, and we don’t have such opportunities. There is the natural upper limit for the total execution time of a test suite. It can be 10 seconds, 10 minutes, 2 hours, or 5 days: it depends on your workflow. But you have this limit anyway; you can’t spend months and years for a single suite run. It would be great if you could run all of your performance tests during a few hours. If the total time is limited and you have too many tests, you can afford the only small number of iterations. It can be 100 iterations, or 10 iterations, or even a single iteration. And sometimes you have to deal with this single iteration. Implicit performance tests should be as fast as possible, there is no reason (typically) to repeat the same thing over and over. In the case of the explicit performance tests, each additional iteration can increase the accuracy. Of course, there is a “desired” level of accuracy and a “recommended” number of iterations. Usually, it doesn’t make sense to “pay” for additional iterations by execution time after that point.

Variance and errors

Since the explicit performance tests are designed to get reliable performance results, we do everything to stabilize them: use real dedicated hardware, make many iterations, and calculate statistics. In case of the implicit performance tests, we (typically) don’t care about variance and errors: we can run it inside a virtual machine, we can choose a new CI agent each time, we can always do only one iteration, and so on. Variance and errors are typically huge.

Well, does it make any sense to analyze the performance of “usual” tests (a.k.a. implicit performance tests) if it’s so unstable? A general answer: it depends. A more specific answer: you will never know if you don’t try. In the “Performance Anomalies” section later in this chapter, we will discuss many approaches that can be easily applied to implicit performance tests. When you work with a huge code base, it’s impossible to cover all methods by performance tests: you don’t have enough time and resources. However, if someone made a simple mistake (most of the mistakes are simple) and get a huge performance regression (most of the regressions due to simple mistakes are huge), you can easily catch it with your “usual” unit and integration tests (if you use them as implicit performance tests).

Monitoring and Telemetry

Monitoring

Monitoring is a typical solution for web servers: we can watch for life indicators of the server with the help of special tools like Zabbix12 or Nagios.13

Telemetry

Telemetry is a widely used technology in software development14 that allows collecting information on the usage of user applications. Such data is typically anonymous and doesn’t include any sensitive information. However, it can include important information about the performance of different operations. While usual monitoring is a great approach for web services, telemetry is our main “monitoring” tool for desktop applications (however, it can also be useful for the client side of web services). There is an existed API for telemetry by Microsoft,15 but we can implement our own set of tools.

For example, Mozilla Firefox collects data16 about memory usage and operation latencies.

Of course, telemetry can include only general usage data without any performance statistics. For example, .NET Core CLI Tools use17 telemetry for collecting information about .NET Core SDK usage.18 The collected telemetry datasets are open and available for everyone, but they don’t include any information about performance.

Common trends

It’s hard to perform a precise analysis, but you can track common trends. For example, you can compare statistics (like average, p90, p99, and so on) of a web page load duration on the previous week (with the previous version of your web service) and the current week (with an updated web service version). If you see a statistically significant difference, it’s a reason for a performance investigation.

Thresholds

If you have a low latency requirement for some operations, you can introduce thresholds and send telemetry data in cases of failure. Imagine that you develop a desktop application and you want to keep the startup time low. Let’s say that 1 second on modern hardware (you can collect information about the hardware as well) is your upper limit. Of course, a user can have some heavy processes running at the same time, so let’s say that the threshold is 2 seconds. If the startup time is more than 2 seconds, a telemetry alarm should be sent. Probably, you will get a few such alarms every day because you can’t control the user environment. However, if you start getting dozens or hundreds of such alarms after the publishing of a new version, you have an issue for investigation.

Manual watching

It’s hard to predict all the things that can go wrong. It’s even harder to automate the analysis of performance plot and write a system that automatically notifies us about all suspicious things. We will talk about performance anomalies later in this chapter. Thus, it’s a common practice when a special person (or a group of people) are looking for performance charts. Popular services require 24/7 monitoring: in case of any problems (not only performance problems but also availability and business logic issues), the reaction must be immediate. Unfortunately, it’s almost impossible to automate this process. But you can use dashboards and alarm systems to make life easier.

Tests with External Dependencies

External services

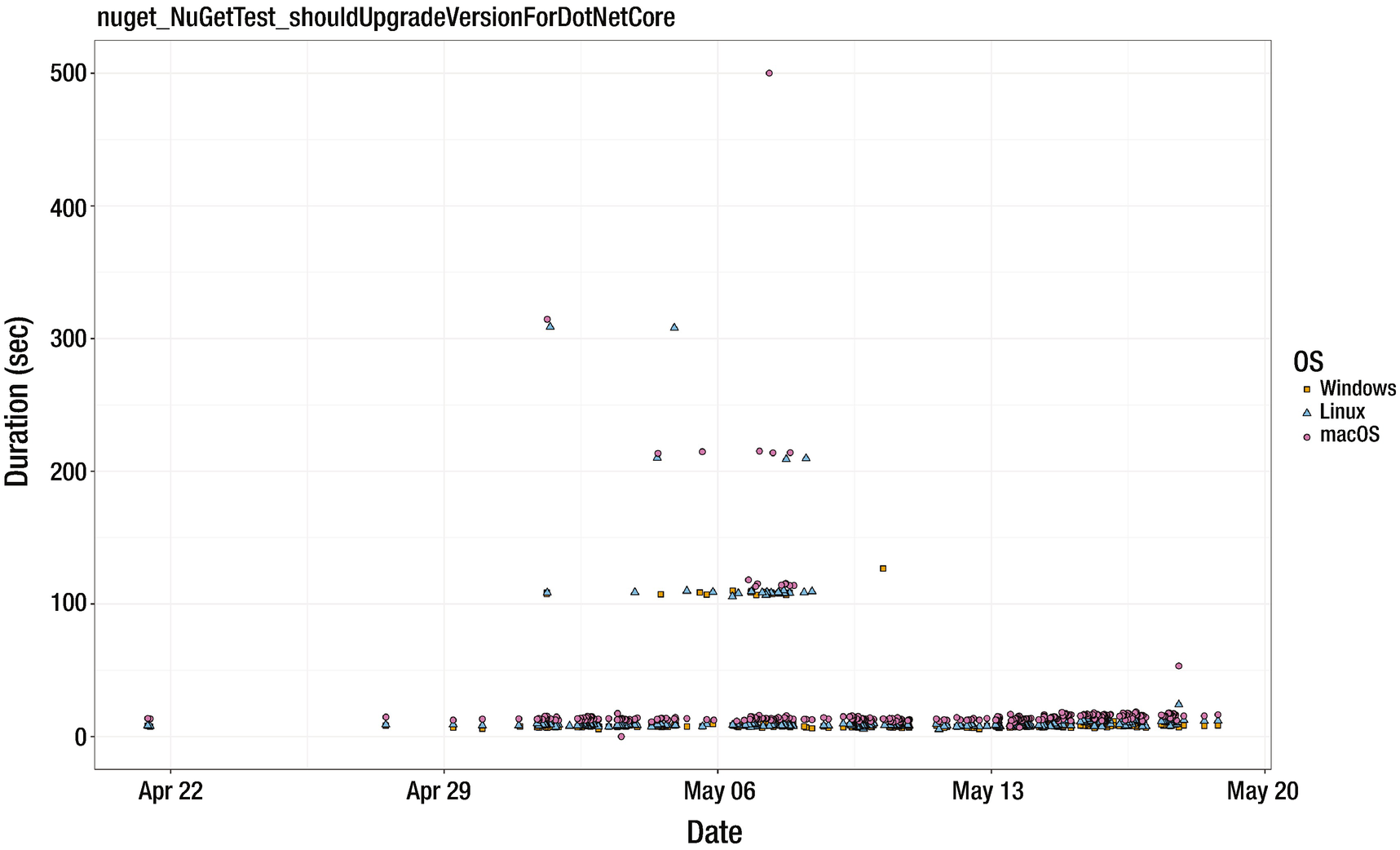

In Rider, we have some tests that cover NuGet features like install, uninstall, or restore. The logic of the test is simple: we just check that we can correctly perform these operations in small and huge solutions. Most of the tests are using our local NuGet repository, but some of them are using the nuget.org and myget.org servers. The primary goal of these tests is checking that the logic is correct, but we can also use it as performance tests. In Figure 5-2, you can see a typical performance plot for one of our NuGet tests. On March 22, 2018, nuget.org was down (see [Kofman 2018]). On April 16, 2018, api.nuget.org was blacklisted in Russia.19 On May 6, 2018, there were some serious problems with search API in the NuGet Gallery (see [Akinshin 2018]). We learn about these incidents immediately because we are watching the performance plots all the time. On the one hand, it’s hard to use such tests for honest performance regression testing: we get false positive results (a performance test is red, but there are no changes in the code base). On the other hand, all these problems are relevant to the behavior that users have in the product. It’s good to be notified about it as soon as possible.

External devices

Many years ago, I was involved in an interesting project. My colleagues and I worked on a program that communicates with OWEN TRM 138.20 This is an industrial measurement device with eight channels that can measure different characteristics, such as temperature, amperage, and voltage. If you connect it to eight different points of a machine detail and measure the temperature at these points, the program can extrapolate the data and build a 2D map of the temperature surface. Everything should work in real time: if the user changes some connection points, the map should be recalculated instantly. The real-time visualization was an important feature, so we checked that the time intervals between changes in the experimental setup and a new visualization. Unfortunately, sometimes we experienced unpredictable delays: OWEN TRM 138 provided data a few seconds late. Thus, it was almost impossible to make reliable performance measurements (because the delays were unpredictable). Eventually, we stopped to measure the whole cycle and started to measure different stages: fetching data, extrapolating, building an image, and so on. It solved the problem because measurements of the device-independent stages were pretty stable.

Performance plot of a NuGet test in Rider

The general advice: if you have some parts of the external world that affect your performance and you can’t control it, try to isolate it. It’s still nice to see the whole picture and get the performance distribution of the whole operations (monitoring/telemetry), but you can’t build reliable performance tests on top of it. For such stages, you should measure test stages that you can control (without any interaction with the external world).

Other Kinds of Performance Tests

There is a huge number of different approaches that can be used for writing performance tests. This section is just an overview of possible techniques; we are not going to cover all of them. However, there are a few more performance test kinds that are worth mentioning: stress/load tests, user interface tests, and fuzz tests.

Stress/load tests

You should always know the limitations of your software product. Usually, it’s a good idea to cover these limitations by performance tests. When we are talking about performance stress tests, we usually mean integration tests. Such testing is especially useful for web services that handle a huge number of users at the same time. A typical mistake for server application benchmarking is focusing only on a situation without load (we send a single request to the server and measure the response time). In real life, you have many users who send requests at the same time. The most interesting thing is that the way the server process these requests depends on the volume of these requests. Fortunately, there are existing solutions that can help to automate this process (e.g., Apache JMeter, Yandex.Tank, Pandora, LoadRunner, Gatling).

User interface tests

It’s not always easy to implement a correct infrastructure for user interface tests, because you usually can’t run it a “headless” mode; you need a “graphical environment” for such tests. For example, in the IntelliJ IDEA code base, there are some user interface tests that check whether the IDE interface is responsive. In the CI pipeline, these tests are running on dedicated agents that are connected to physical 4K monitors.

There are also many libraries and frameworks that can help you to automate testing of the interface in your product (e.g., Selenium).

Fuzz tests

We already know that the performance space is complicated and a method duration can depend on many different factors. Let’s say that there is an algorithm that processes a list of integers and makes some calculations. We implemented a faster version of this algorithm and now we want to verify that it really works faster. How should we compare them? Obviously, we can create a reference set of lists and benchmark both algorithms on each list from the set. Even if the new algorithm shows great results on all these pregenerated lists, we can’t be sure that it will always be faster than the original algorithm. What if there is a corner case that spoils the performance of the new implementation? Unfortunately, we can’t enumerate all possible lists of integers and check each of them. In such cases, we can try a technique called fuzzing. The idea is simple: we should generate random lists until we find input which causes problems. A very simplified version may look as follows:for (int i = 0; i < N; i++){var list = GenerateRandomList();var statistics = RunBenchmark(NewAlgorithm, list);if (HasPerformanceProblem(statistics))ReportAboutProblem(list);}

Fuzzing is a powerful approach used in different areas of software engineering. It can be applied even for searching for bugs in RyuJIT (see [Warren 2018] for details). If we can discover bugs in a JIT compiler that were unnoticed by developers and passed all unit tests, we definitely can try it in benchmarking.

Here is another situation : a user complains about performance problems, you know that these problems most likely relate to specific parameter values, but you don’t know the exact values that cause the problems, and it’s not possible to get information about the user setup. If you are not able to try all possible setups, you can try to find it with the help of fuzzing.

Fuzzing can be also a part of your continuous integration pipeline: you can generate new input data each time and check for unusual performance phenomena.

However, fuzzing has one important drawback. It breaks one of the main benchmark requirements: the repeatability. The fuzz benchmarks are a special kind with only one goal: to catch undesirable results. However, you still should make each run of a fuzz benchmark repeatable by saving the input data or a random seed that is used for data generation.

Summing Up

There are many kinds of benchmarks and performance tests. In this section, we discussed only some of them. To be honest, all kinds of performance tests are not exactly kinds. They are like concepts, ideas, or approaches that you can mix in any combination. For example, you can use asymptotic analysis for capacity planning for a web server in the warmed state under load. Of course, you shouldn’t implement all the discussed test categories in each product: you can select only a few of them or invent your own kinds of performance tests relevant to your problems. The main rule is simple: you should design such tests that correspond to the business goals and take a reasonable amount of time. If you write some benchmarks or performance tests, you should clearly understand what kind of problems are you going to solve. Typically, figuring out the problem takes more than half of the time that goes into finding the solution. Based on this understanding, you can choose the best techniques (or combinations of them) that fit your situation.

Performance Anomalies

In simple words, a performance anomaly is a situation when the performance space looks “strange.” What does this mean? Well, you can choose your own definition. It’s a situation when you look at a performance plot and say: “This plot seems unusual and suspicious; we might have a problem with it. We should investigate it and understand why we have such plot.”

An anomaly is not a problem that should be fixed; it is a characteristic of the performance space that you should know. All anomalies can be divided into two groups: temporal and spatial. A temporal anomaly assumes that you have a history (a set of revisions or commits) that is analyzed. For example, you can find a problem that was introduced by recent changes in the source code. A spatial anomaly can be detected in a single revision. For example, it can be based on a difference between environments or a strange performance distribution of a single test.

Degradation. Something worked quickly before, and now it works slowly.

Acceleration. Something worked slowly before, and now it works quickly.

Temporal clustering. Something suddenly changed for several tests at the same time.

Spatial clustering. Performance results depend on a parameter of the test environment.

Huge duration. A test takes too much time.

Huge variance. The difference between subsequential measurements without any changes is huge.

Huge outliers. The distribution has too many extremely high values.

Multimodal distributions. The distribution has several modes.

False anomalies. A situation when the performance space looks “strange,” but there’s nothing to worry about here.

Each anomaly subsection has a small example with a table that illustrates the problem. After that, we discuss the anomaly in detail and why it’s so important to detect it. Some of the subsections also contain a short classification of the anomaly kinds.

In the last two subsections, we will discuss problems that can be solved by hunting for these anomalies and recommendations about what can you do with performance anomalies.

Let’s start from one of most famous anomalies: the performance degradation.

Degradation

Performance degradation is a situation when a test works slower than before. It’s a temporal anomaly because you detect a degradation by comparing several revisions.

An Example of Degradation

Day | May 17 | May 18 | May 19 | May 20 | May 21 | May 22 |

|---|---|---|---|---|---|---|

Time | 504 ms | 520 ms | 513 ms | 2437 ms | 2542 ms | 2496 ms |

Performance degradation is one of the most common anomalies. When people talk about performance testing, one of the typical goals is to prevent performance degradation. Sometimes it’s the only goal (before people start to explore the performance state and discover exciting things).

Cliff

A cliff degradation is a situation when you have a statistically significant performance drop after a commit. You can see an example of the cliff degradation in Figure 5-3.

Incline

An incline degradation is a situation when you have a series of small performance degradations. Each degradation can’t be easily detected, but you can observe a performance drop when you look at the history for a period. For example, your current performance can be 2 times worse than a month ago, but you can’t point to a commit that ruined everything because there are too many commits with a small performance impact. You can see an example of the incline degradation in Figure 5-4.

Performance anomaly : cliff

Performance anomaly: incline

Of course, it’s not always easy to say whether you have a cliff degradation, an incline degradation, a mix of them, or whether you have a degradation at all. However, the difference between the cliff and the incline is important because it affects when and how you are going to detect a degradation: the cliff can be detected on a specific commit (even before a merge), and the incline can be detected during the retrospective analysis .

Acceleration

Performance acceleration is a situation when a test works faster than before. It’s a temporal anomaly because you detect acceleration by comparing several revisions.

An Example of Acceleration

Day | Apr 05 | Apr 06 | Apr 07 | Apr 08 | Apr 09 | Apr 10 |

|---|---|---|---|---|---|---|

Time | 954 ms | 981 ms | 941 ms | 1 ms | 2 ms | 1 ms |

Expected accelerations

An expected acceleration is a good anomaly. For example, you make an optimization, commit it, and see that many tests work much faster now. There’s nothing to worry about! However, it still makes sense to track such anomalies because of the following reasons:Tracking optimization impact

Even if you are sure that the optimization works, it still makes sense to verify it. Of course, you should perform local checks first, but it’s better to have several verification stages: it reduces the risk that a problem can go unnoticed. Also, you get a better overview of the features that were improved.

Team morale

However, tracking such acceleration can be good for morale in your team. When you implement a feature, you instantly see the result of your work. When you fix performance problems all the time, it can be demoralizing due to lack of feedback.21 People should see a positive impact of their work. A single performance plot with significant performance improvements can make a developer very happy.

Unexpected accelerations

An unexpected acceleration is always suspicious. You can meet a lot of developers who can say something like the following: “I didn’t change anything, but now the software works faster. Hooray!” Unfortunately, an unexpected speedup can often mean a bug. I had observed many situations when a developer accidentally turned off a feature and got a performance improvement. Such situations can pass all the tests, but you can’t hide them from the performance plots! Investigations of unexpected accelerations don’t help you with performance, but they can help you to find some bugs.

Temporal Clustering

Temporal clustering is a situation when several tests have significant performance changes at the same time. It’s a temporal anomaly because you detect it by comparing several revisions.

An Example of Temporal Clustering

Day | Oct 29 | Oct 30 | Oct 31 | Nov 01 | Nov 02 |

|---|---|---|---|---|---|

Test1 | 1.4 sec | 1.3 sec | 1.4 sec | 2.9 sec | 2.8 sec |

Test2 | 4.3 sec | 4.2 sec | 4.4 sec | 8.8 sec | 8.7 sec |

Test3 | 5.3 sec | 5.3 sec | 5.4 sec | 5.4 sec | 5.3 sec |

One of the performance testing goals is automation. A simple “you have a problem somewhere here” is a good thing, but it’s not enough. You should provide all data that can help to investigate the problem quickly and easily.

One of the ways to do it is by tracking the grouped changes. If you get 100 tests with problems after a change, it doesn’t mean that you should create 100 issues in your bug tracker and investigate them independently. It’s most likely that you have a few problems (or only one problem) that affect many tests. Thus, you should find groups of the tests that likely suffer from the same problem.

Suite degradation

Most of the projects have a test hierarchy. You can have several projects in a solution, several test classes in a project, several test methods in a class, and several input parameter sets for a method. When you are looking for performance degradation or another performance anomaly, you should try to highlight test suites22 that share the same problem.

Let’s look at an example in Table 5-5. Here we have two suites: A and B, three tests in each suite. We have some measurements before and after some changes. We have different measurement values for all tests, but some of them can be explained by natural noise. You can note that performance delta in the B suite is not significant: it’s about 1% (typical fluctuations for usual unit tests). Meanwhile, we have a noticeable time increase for tests from the A suite: around 10-18%. The fact that we got a performance degradation for all tests of the suite at the same time is a reason to assume that we have the same problem with the whole suite.Table 5-5.An Example of Suite Degradation

Suite

Test

Time (before)

Time (after)

Delta

A

A1

731 ms

834 ms

103 ms

A

A2

527 ms

623 ms

96 ms

A

A3

812 ms

907 ms

95 ms

B

B1

345 ms

349 ms

4 ms

B

B2

972 ms

966 ms

−6 ms

B

B3

654 ms

657 ms

3 ms

Paired degradation/acceleration

This is another kind of very common problem. In a suite, you often have an initialization logic. It can be an explicit setup or an implicit lazy initialization. In this case, you can have a test that works slowly not because of the test logic, but because it includes the initialization logic. Let’s look at an example in Table 5-6. As you can see, before the change all test methods take about 100 ms except Foo which takes 543 ms. After the change, Foo takes 104 ms (acceleration), Bar takes 560 ms (degradation), and other tests don’t have statistically significant changes. In such cases, we can assume that the order of tests was changed: Foo was the first test in the suite before the changes; after the changes, Bar is the first test. This is not always true, but it’s a hypothesis which should be checked. Why should we care about it? The initialization logic should always move away from the tests to a separate method. It’s not only a good practice, but it’s also important from the performance point of view. A huge deviation from the setup can hide real performance problems in the tests. Let’s do some calculations with rounded example values. If a test takes 100 ms and a setup takes 400 ms, they take 500 ms together. If we have a 30 ms degradation, this comprises 30% of the test time (a significant change) and only 6% of the total time, which can be ignored because of huge errors. If you have a setup logic inside one of the tests, it’s not a bug, but it’s a design flaw. Usually, it’s a good idea to get rid of it (if possible).Table 5-6.An Example of Suite Degradation

Test

Time (before)

Time (after)

Delta

Foo

543 ms

104 ms

-439 ms

Bar

108 ms

560 ms

452 ms

Baz

94 ms

101 ms

7 ms

Qux

103 ms

105 ms

2 ms

Quux

102 ms

99 ms

-3 ms

Quuz

98 ms

96 ms

-2 ms

Correlated changes in time series

If you can detect a correlation between two time series in your tests, it can be interesting to check that you always have this correlation. In Table 5-7, you can see an example of some latency and throughput measurements. The latency is just a raw duration, the throughput is a number of RPS. We run these tests on different agents with different hardware, so we can’t apply “usual” degradation analysis here. However, we can notice a pattern: Throughput≈2 sec / Latency. For example, if Latency = 0.1 sec, we get Throughput = 2 sec / 0.1 sec = 20. This pattern can be explained by parallelization: we have two threads on each agent that process our requests. We can observe such patterns on all agents except Agent4. So, we can assume that something is wrong with parallelization here. Of course, we can detect this problem in other ways. However, the correlation analysis helped us to formulate a hypothesis for future investigation (something is wrong with the Latency/Throughput) and get additional important information (we have this problem only on Agent4). Such facts can save a lot of investigator time because you can collect all such suspicious patterns automatically. You can find another example of such analysis in [AnomalyIo 2017].Table 5-7.An Example of Correlated Changes in Time Series

Day

Agent

Latency

Throughput

Jan 12

Agent1

100 ms

20.12 RPS

Jan 13

Agent1

105 ms

19.01 RPS

Jan 14

Agent2

210 ms

9.48 RPS

Jan 15

Agent2

220 ms

8.98 RPS

Jan 16

Agent3

154 ms

12.89 RPS

Jan 17

Agent3

162 ms

12.41 RPS

Jan 18

Agent4

205 ms

4.95 RPS

Jan 19

Agent4

209 ms

5.02 RPS

Spatial Clustering

Spatial clustering is a situation when the performance of some tests significantly depends on some test or environment parameters. It’s a spatial anomaly because you detect it with a single revision.

An Example of Spatial Clustering

Test1 | Test2 | Test3 | |

|---|---|---|---|

Windows | 5.2 sec | 9.3 sec | 1.2 sec |

Linux | 0.4 sec | 0.6 sec | 1.4 sec |

macOS | 0.4 sec | 0.7 sec | 1.2 sec |

Performance anomaly: spatial clustering

Let’s consider an example . The same version of ReSharper should work on different versions of Visual Studio (VS). For example, ReSharper 2017.3 should work on VS 2010, VS 2012, VS 2013, VS 2015, and VS 2017. The ReSharper team has a suite of integration tests that are executed on all versions of Visual Studio. It’s not a rare situation when some changes spoil performance only on a specific version of Visual Studio. Moreover, if we work only with a single revision (without performance history), we can observe that some tests work fast on VS 2010, VS 2012, VS 2013, and VS 2015 and work slowly on VS 2017. It’s a good practice to look for such situations and try to investigate them.

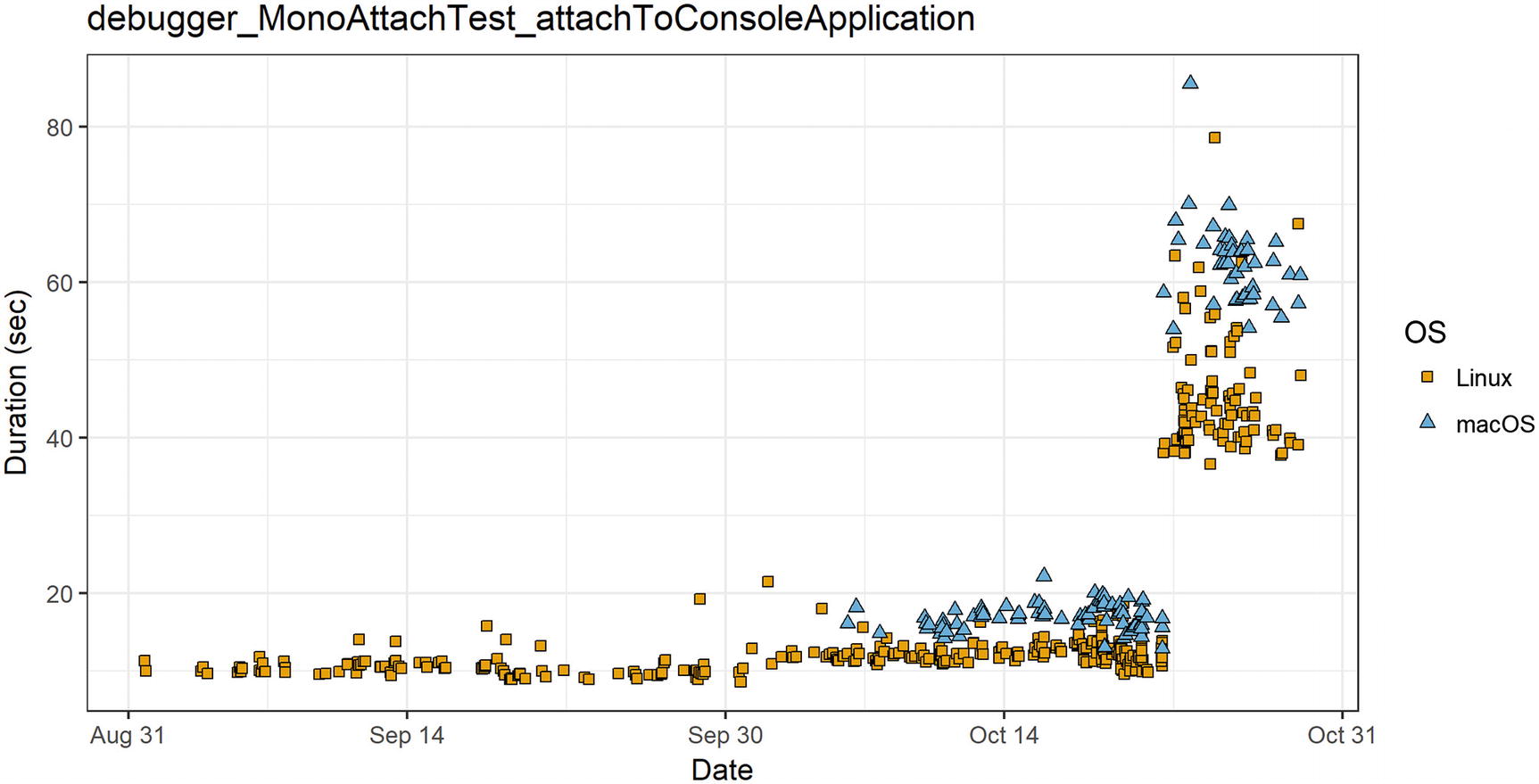

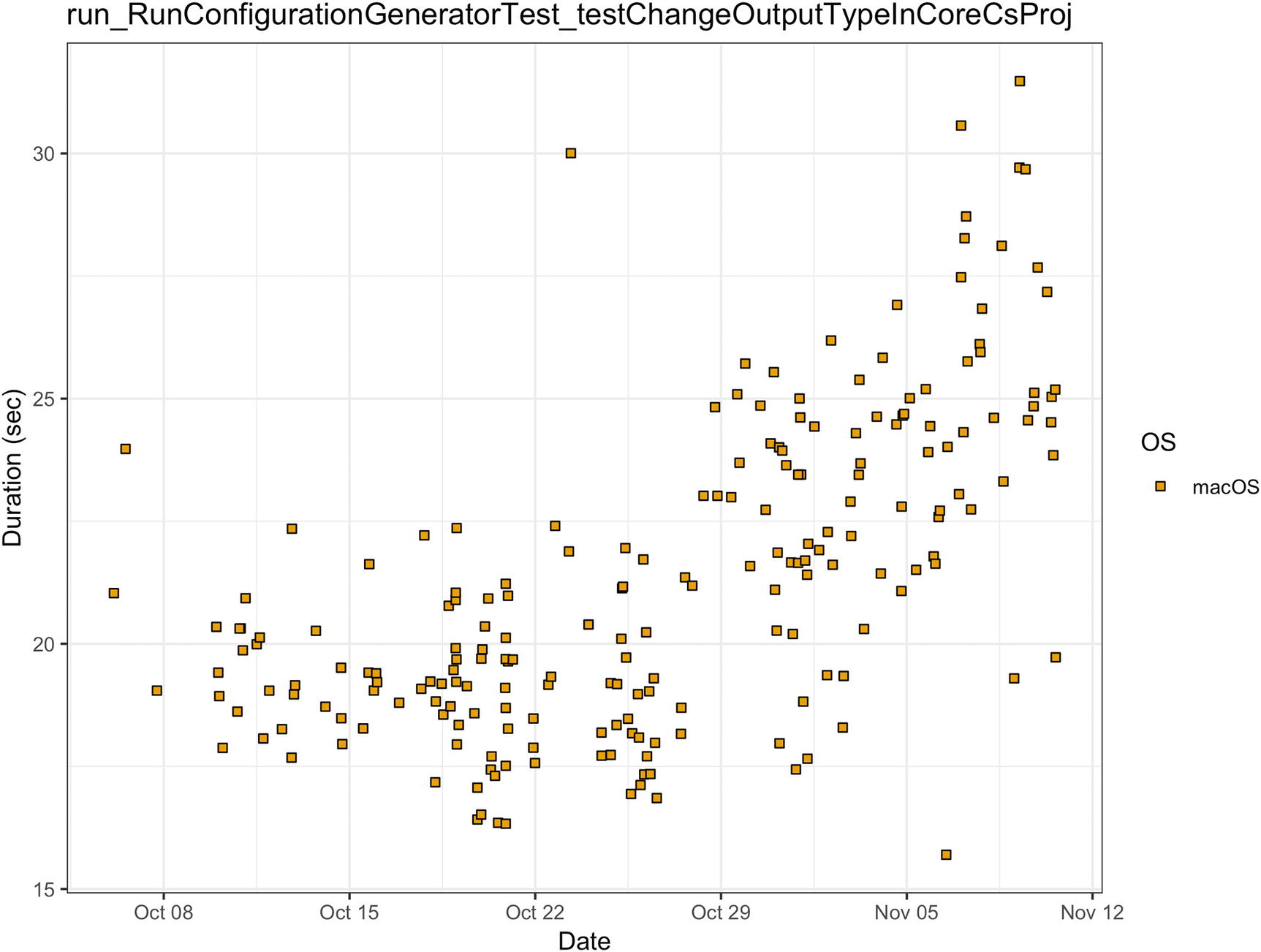

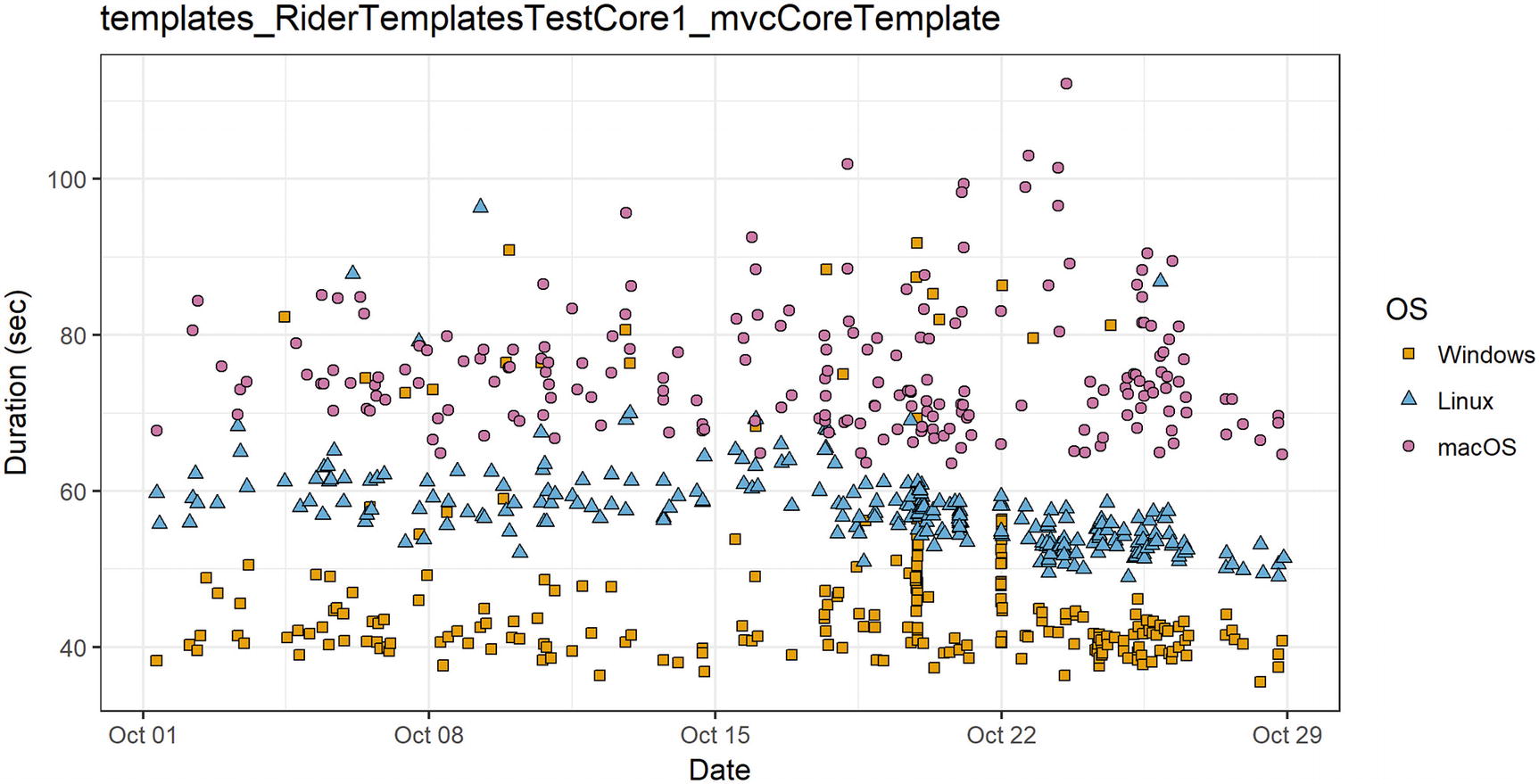

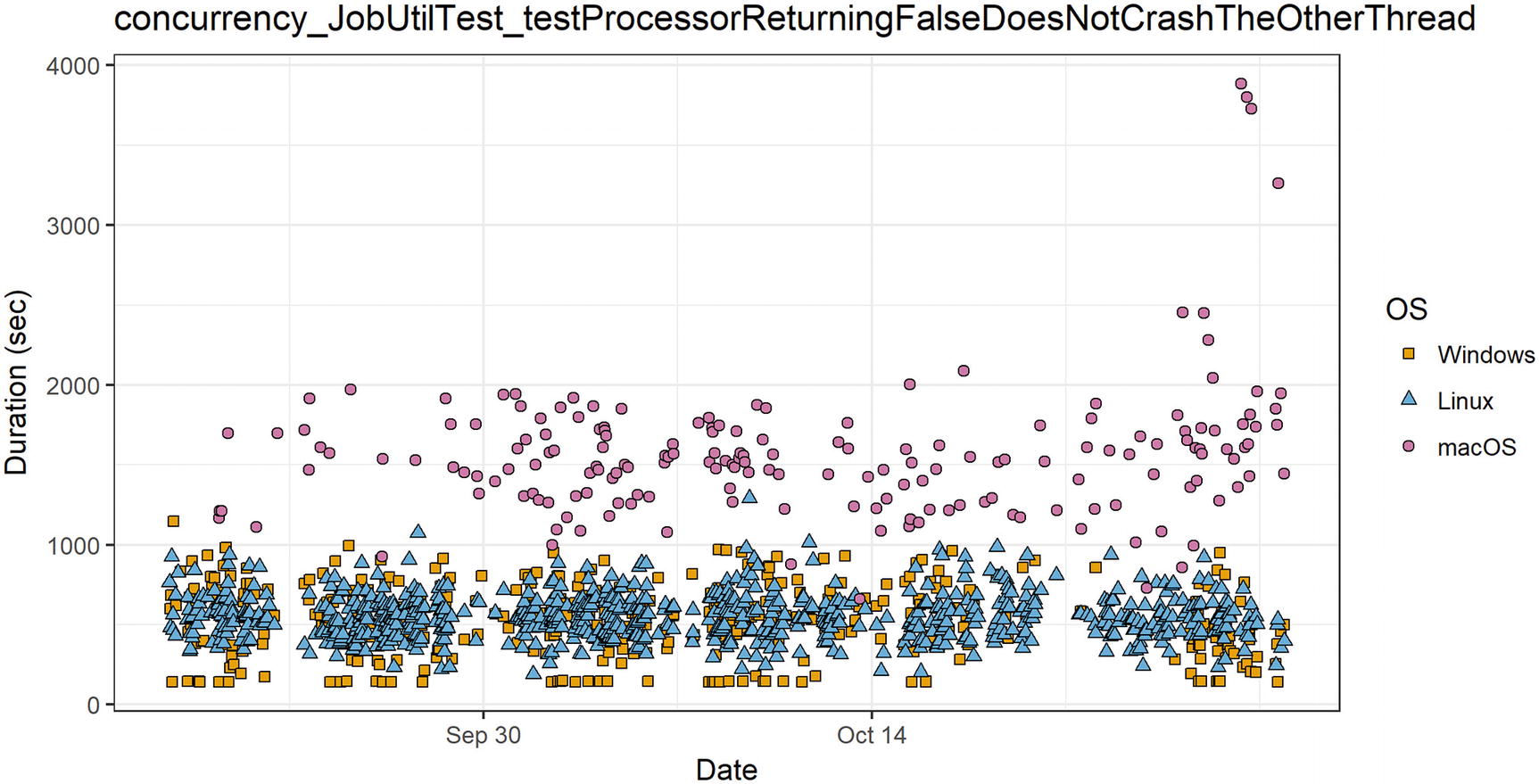

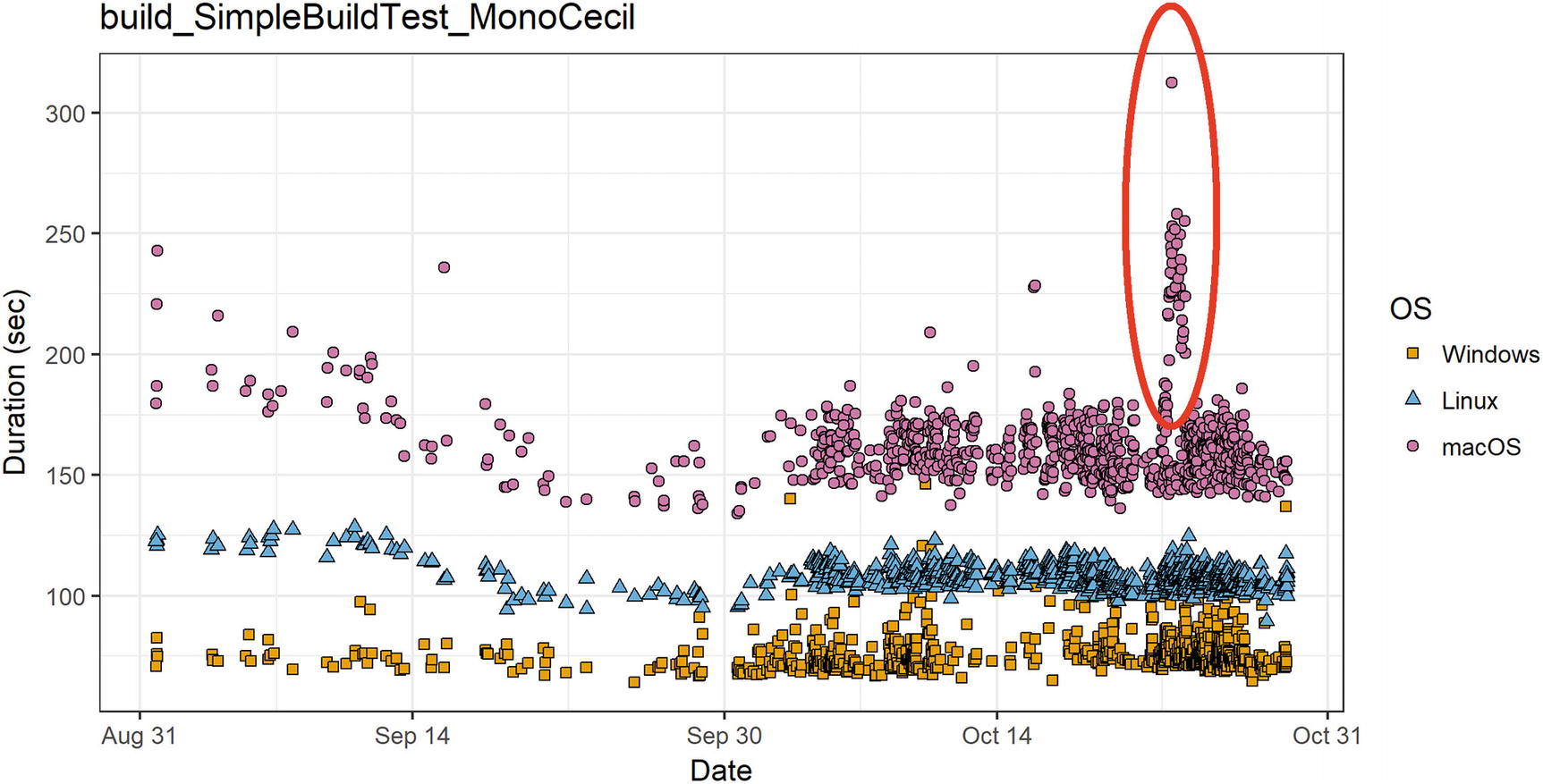

Another example is about Rider. Rider should work fast on all supported operating systems. It uses .NET Framework on Windows and Mono on Linux/macOS. Most of the tests have about the same duration on different operating systems, but some of them demonstrate huge differences. In Figure 5-5, you can see performance measurements for .NET Core ASP.NET MVC template (create a solution from the template, restore NuGet packages, build it, run the analysis, and so on). As you can see in the figure, these tests work faster on Windows than on Linux or macOS. Also, it has a huge variance, but we will discuss it in the next subsection.

The clustering anomaly can be applied to a single revision instead of a set of revisions. It doesn’t show problems which were introduced by recent changes, but it can show problems that you have right now (and had for a long time).

In Chapter 4, we discussed the multiple comparisons problem. This becomes a very serious problem when we are talking about clustering. The more parameters we consider, the more chances we have of finding a “pseudo” clustering. If you include too many parameters in the parameter set (you can include anything from the GCCpuGroup value and free disk space to times of day23 and the moon phase24), you will definitely find a parameter that ostensibly affects the performance. In this case, you can try a popular method of vector quantization from k-means clustering (e.g., see [AnomalyIo 2015]) to neural models and machine learning (some of the cauterization methods were covered in Chapter 4).

Huge Duration

Huge duration is a situation when some tests take too much time. “Too much” can be relative (much more than most of the tests) or absolute (seconds, minutes, or even hours). It’s usually a spatial anomaly because you are looking for the slowest test per revision.

Examples of Huge Duration

Place | Test | Time |

|---|---|---|

1 | Test472 | 18.54 sec |

2 | Test917 | 16.83 sec |

3 | Test124 | 5.62 sec |

4 | Test952 | 0.42 sec |

5 | Test293 | 0.19 sec |

What is the maximum acceptable duration of a single test?

What is the maximum acceptable duration of the whole test suite?

Check out the durations of tests in your project. What is the typical duration of the whole test suite? Find the slowest test (or a group of the slowest tests). Is it possible to test the same thing in less time?

It’s always great when you can run all of your tests quickly. When we are talking about usual unit tests, it’s a typical situation when thousands of tests take a few seconds. However, the situation is worse with integration and performance tests. Sometimes, such tests can take minutes and even hours.

If you are going to speed up the test suite, it doesn’t mean that you should implement some crazy optimizations. There are many examples of success stories when people significantly reduce the total test suite duration by a small change. In [Kondratyuk 2017], a developer changed localhost to 127.0.0.1 and got a 18x speedup of a test suite. In [Songkick 2012], the test suite time was reduced from 15 hours to 15 seconds by a series of different improvements. In [Bragg 2017], the test suite time was reduced from 24 hours to 20 seconds.

Run tests in parallel if possible

If you are care only about the total build time, you should try to run tests in parallel. Be careful: in this case, you will not get reliable performance results. Also, it’s not always possible to run arbitrary tests in parallel because they can work with the same static class or share resources (e.g., files on a disk).

Replace integration tests by unit tests if possibleIf you have a ready framework for integration tests, it’s usually much simpler to write an integration test instead of a unit test. Unit tests require some effort: you have to isolate a part of the system correctly, mock other parts, generate synthetic data, and so on. You typically shouldn’t do it in integration tests: the whole system with real data is ready for your checks. However, if you want to check only a single feature, a unit test is a recommended way. If you run the unit tests before the integration tests, the increased feature covering by additional unit tests can also improve the build time: in case of failed unit tests, you can skip the integration test phase .

Huge Variance

Huge variance is a situation when some tests have too much variance. “Too much” can be relative to other tests (much more than most of the tests), relative to the mean value (e.g., mean = 50 sec, variance = 40 sec), or absolute (seconds, minutes, or even hours). It can be a temporal anomaly (if you analyze a performance history) or a spatial anomaly (if you analyze several iterations for the same revision).

An Example of Huge Variance

InvocationIndex | Time |

|---|---|

1 | 2.34 sec |

2 | 54.73 sec |

3 | 5.15 sec |

4 | 186.94 sec |

5 | 25.70 sec |

6 | 92.52 sec |

7 | 144.41 sec |

Performance anomaly: variance

Huge Outliers

Huge outliers is a situation when the outliers values are too big (much bigger than the mean value) or there are too many outlier values (e.g., significantly more than before). It can be a temporal anomaly (if you analyze a performance history) or a spatial anomaly (if you analyze several test iterations for the same revision).

An Example of Huge Outliers

InvocationIndex | Time |

|---|---|

1 | 100 ms |

2 | 105 ms |

3 | 103 ms |

4 | 1048 ms |

5 | 102 ms |

6 | 97 ms |

It’s a normal situation when you have some outlier values. However, there are expected and unexpected outliers. To be more precise, there is the expected number of outliers. For example, if you do a lot of I/O operations, you will definitely get some outliers, but you will get them with the same rate for the same configurations. Different configurations can have a different number of expected outliers. If you read data from the disk, you will probably get different distributions for Windows+HDD and Linux+SSD. But you usually have the same number for a fixed configuration (for example, 10–15 outliers for 1000 iterations).

Checking the number of outlier values is a powerful technique that helps to detect additional suspicious changes. It’s OK to have outliers , but you should always understand why you have them.

Too many outliers